The 28th BMVA Computer Vision Summer School took place at Aberdeen University between 7th and 11th July. It was a very exciting week full of great lectures and unexpectedly warm weather.

The location was quite convenient for me, and I got to enjoy a 3-hour direct train journey along the east coast. To make things less smooth, the confusion started as soon as I got off the train. Aberdeen has no Uber (learned the hard way), and it turns out, if you want to take a taxi from the train station, you just have to wait for one to show up (like a bus). Once I found myself a taxi driver, a lovely lady who gave me a full history of Aberdeen along with some sightseeing tips, a short trip to Hillhead student village followed.

The lectures

In total, there were 16 lectures covering a broad spectrum of computer vision topics. Each talk was 90 minutes long, so there was a lot of information to process during and after the week.

Monday

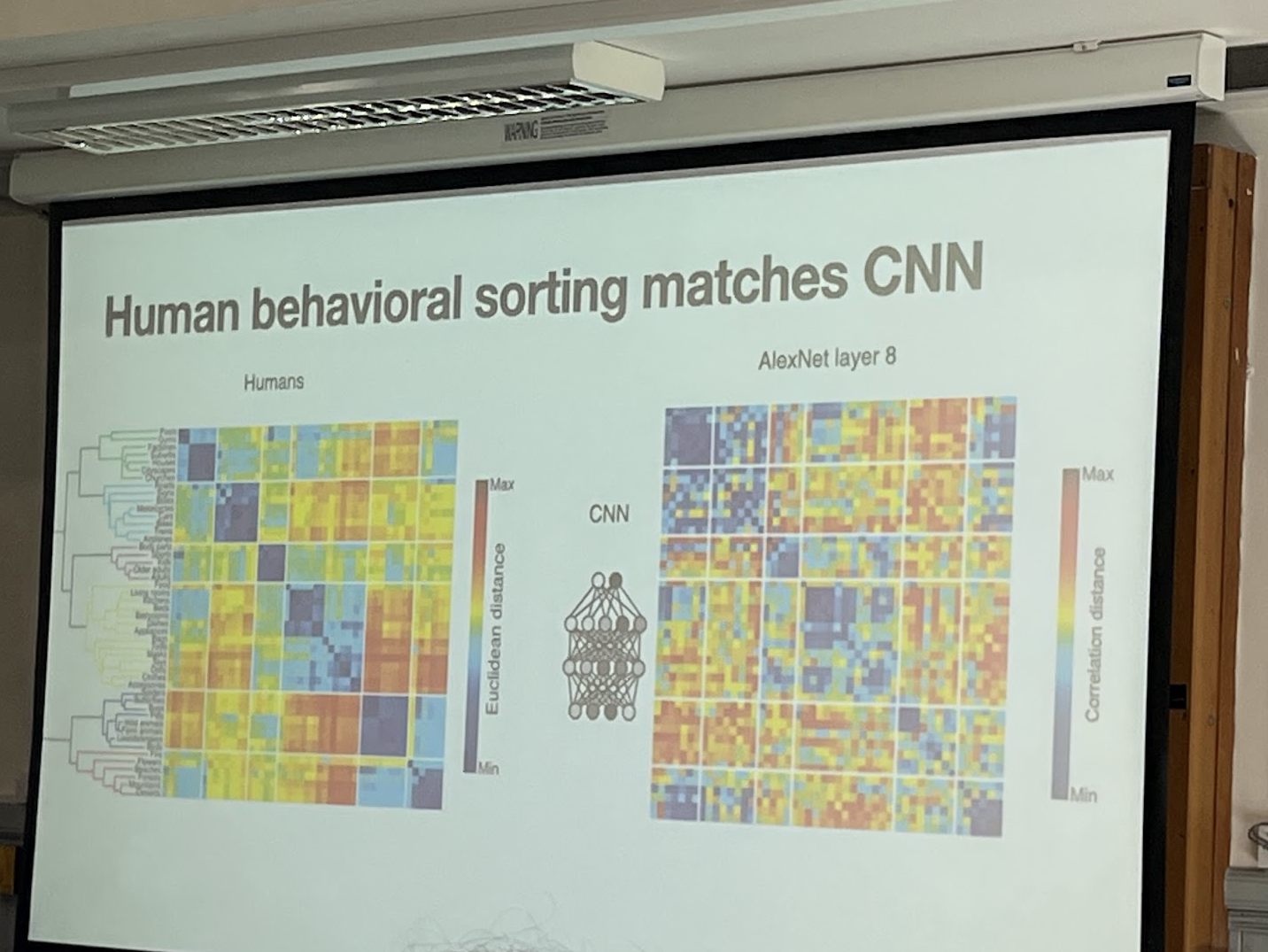

The opening talk was by Iris Groen on Human vs. Machine vision. This was one of my favourite talks; it was focused on neuroscience and showed how computer vision took inspiration from human vision. First, we learned about how our vision works and how the brain processes visual information. There was a comparison of CNNs and human image processing: both of these seem to map information hierarchically and even sort images into similar categories!

The second talk on Monday was by Andrew French on Classical computer vision. I find it fascinating that you can achieve quite a lot even without deep learning. Of course, the modern convolutional networks greatly advanced CV, but it was fun to learn about what people did before (I’m saying this as if the old methods were created 200 years ago).

Tuesday

The second day was heavily focused on video analysis and various use cases. Tuesday talks were opened by Michael Wray and his lecture on Egocentric vision. First-person perspective videos have many uses and can often capture the task better. Third-person angle needs extra information; first-person view can be captured with one camera. Although egocentric vision captures a natural view of the world, it somehow felt less natural to watch compared to a standard video. However, many robotics tasks require training data of this kind. Michael talked about how his research group created a massive ego-centric vision dataset, working with many volunteers, filming all their kitchen endeavors. The end of the talk touched on ethics and privacy. We had a vote on how many people are okay to be filmed vs have their health data shared. To my surprise, being filmed was perceived much more negatively than sharing health data.

The second talk was on CLIP for video analytics by Victor Sanchez. Moving on from a single camera to using multiple cameras for pedestrian tracking and predicting on which camera the person will appear next. Some very impressive results were presented. For example, in an experiment with multiple cameras covering corridors at a university, the new method was able to accurately predict which direction the person was walking and which camera would see them next.

The third talk was by Andrew Gilbert: From paragraphs to pixels: LLMS in video understanding. The goal was to create a model that can be used for audio description for movies. This is obviously a very useful research area as many movies are missing this, leaving visually impaired people with fewer choices. However, there is nuance to this task. Audio description is not simply describing what is happening on the screen. It needs to be short to integrate between the dialogue, retain context throughout the movie, and some amount of art is required to produce a proper cinematic experience. The models have been able to learn some of these traits already, but long-term memory and focus on the most important things are still a challenge.

Oisin Mac Aodha closed the day with self-supervised learning. For me, this was a very useful talk as I often struggle to get enough labeled data despite having an abundance of images. It gave me ideas for new things I could try in my project to tackle this problem and make use of all the data I have available.

Wednesday

Wednesday is when things started to get mathsy. The morning talk was by Neil DF Campbell on Uncertainty and evaluation of computer vision. I think this is super important for any computer vision project, and I really enjoyed seeing stats again! The talk was extremely well delivered and remained engaging despite being 90 minutes of maths first thing in the morning.

The second half of the morning was dedicated to a Pytorch tutorial with 4 collab notebooks available to introduce and explain how all the deep learning maths is translated to code. I wasn’t too excited about this one at first, but it ended up being very interesting. It’s quite easy to get comfortable with using all the ML libraries and not necessarily understand how they work. I have more insight into Pytorch now (even if I struggled to remember how to differentiate).

The afternoon started with Paul Henderson and his talk Structured generative models for computer vision. This talk made me realize GANs are quite fascinating, but I am still puzzled how we can start with some random noise and get a nice image as a result. It does feel like magic.

The final talk of the day was Elliot J. Crowley- Efficient models for computer vision. Again, a talk I think applies to nearly everyone, trying to demonstrate that having billions of parameters in NNs does not always have to be the right way. I aspire to be as relaxed and comfortable with presenting as Elliot seems to be.

Thursday

As the week progressed, the topics were going from broad to more specific. Thursday started with CV in robotics and SLAM by Mike Mangan. This talk was industry-geared, compared to all the other ones. We got introduced to Opteran and their development of natural intelligence. One of the products Opteran makes is robots for warehouses. They draw inspiration from nature and mainly insects for implementing various components. This way, the robot can robustly navigate the ever-changing warehouse environment and not get confused. The talk featured a live demo, which understandably sparked joy in everyone. It was super impressive too!

This presentation was followed by Armin Mustafa with 4D machine perception of complex scenes. One of the research areas is personalised media. The content can be made flexible in real time, making it more accessible for people with disabilities or displaying the most relevant information. You could manipulate how loud certain components are, get personalised summaries of movies/series you missed, or decide how things look. Another use of 4D perception is for augmented reality and storytelling.

The third talk on Thursday was by Tolga Birdal – Shape shifters: Geometry, topology and learning for beyond computer vision. I’ve always been fascinated by the inner workings of machine learning and neural networks and the underlying maths concepts. Tolga is a fantastic and engaging speaker, but I’ve not seen proper maths in so long that it wasn’t the easiest material to process. That’s not to say I didn’t like the talk, I would really like to find some time to learn about all the mathsy things in ML (again). The most fun takeaway: people at some point believed that geometry was not good for you, i’m sure many student will agree.

In the last talk of the day, Binod Bhattarai presented on trustworthy AI in medical imaging. It was cool to see how successful some applications of CV in medical settings have been. However, medical data is very sensitive and comes with challenges when it comes to handling it. Here, a method called federated learning can be used to create collaborative machine learning without data sharing. Besides the privacy issue, another big challenge is classifying something rare that doesn’t appear in the datasets. Out of distribution detection is a great concept, and also shows AI is more of a tool to be used rather than fully replace someone’s job.

Friday

Friday was the last day of summer school, and the final talks were:

Pascal Mettes – Hyperbolic and hyperspherical deep learning. Euclidean geometry is dominant in schools, computers, and deep learning tools. But the world is not always Euclidean. For example, to do representation learning, we need hierarchical geometry. Image and text embeddings are unequal, but by using hyperbolic entailments, we can model this imbalance.

The closing talk was by Oya Celikutan – Multimodal human behavior understanding and generation for human-robot interaction. She demonstrated how many aspects need to be considered to create a robot capable of socially aware navigation. For example, the robot needs to be able to do a crowd analysis, conversational group analysis, or detect intention to interact. For a successful human-robot interaction, we need to pay attention to reliability, predictability, and safety. There are so many nuances when it comes to interaction and conversation; the robot needs to learn to generate co-speech gestures and maybe even have empathy.

The socials

Monday ended with a poster session on the top floor of the library. All the participants were rewarded with a wonderful view of the campus, plenty of drinks, and nibbles. There were around 30 posters on all sorts of topics. Gaussian splatting, generative AI, detecting violence from videos, and detecting seals from satellite images are just a few examples.

I had a few nice chats with people about my poster and even met a few others working on projects in the underwater environment! One of the other presenters is actually working on the classification of coral reefs! Overall, there were quite a few environmental use cases, which was great to see.

In the evening on Tuesday, there was another social, this time at the Maritime Museum. Not going to lie, I was incredibly excited to see the giant oil rig model and all the ships as well. I am happy a social was organized here as the day of lectures would normally finish at 5 pm when all the museums in Aberdeen would close, so it was really nice to get to see one anyway.

End of day three had yet another social, this time a dinner at the Beach Ballroom. The venue location was great as I finally got to see the local beach! At the start of the evening, the top 3 posters were announced here, and I won the second best prize! The announcement was followed by a lovely dinner (with sticky toffee pudding!) and some great chats about various projects and hobbies. I even found a whole group of board gamers, but unfortunately forgot my travel version of Catan at home.

Final impressions

This was an excellent week full of learning and sharing ideas. While all of the attendants study/work in CV, you’d find it quite challenging to find two people with extremely similar projects. This goes to show how incredibly broad the field of computer vision is. It is no easy task to put together lectures that would be equally interesting for everyone, but I think the organizers did a great job. I did talk to quite a few people working on CV for environmental use cases, so perhaps a talk on this topic would be a welcome addition.

The accommodation was a pretty standard student hall layout. No big issues, and my room came with a very nice birdwatching window to observe baby gulls and some oystercatchers too. The breakfast was somewhat funny, you can play a little game of find 5 differences below😁. I think after a week of these, I became somewhat obsessed with the jelly pot. General note on food, while I’m not a big fan of the conference-style buffets, the food options we got were excellent.

I fully recommend that everyone working in or studying computer vision attend this summer school. It is very well organised and probably one of the most advanced CV summer schools out there.